Posts in GitOps

Using Docker Compose in GitHub Workflows

- 02 August 2024

GitHub Actions is a powerful tool for automating your CI/CD pipelines. It allows you to run your tests, build your code, and deploy your applications with ease. One of the most common use cases for GitHub Actions is building and testing Docker images. The example below shows how to use Docker Compose in a GitHub Workflow to build and test a Docker image when a docker-compose.test.yml file containing the test configuration is present.

This runs a separate executable called docker-compose to build and run the tests, but the error message shows that the command is not found. The error message is shown below:
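The error text itself is not reproduced in this overview. As a hedged sketch of one common remedy, the workflow below calls the Compose plugin that ships with the Docker CLI (`docker compose`) instead of the standalone `docker-compose` executable. The workflow name, the job name, and the `sut` service are assumptions for illustration and are not taken from the original post.

```yaml
# Sketch only: workflow, job, and service names are illustrative assumptions.
name: Docker tests

on:
  push:
  pull_request:

jobs:
  test:
    runs-on: ubuntu-latest
    steps:
      - uses: actions/checkout@v4

      # Only build and test when the test configuration is present.
      - name: Build and run tests with Docker Compose
        run: |
          if [ -f docker-compose.test.yml ]; then
            docker compose --file docker-compose.test.yml build
            # "sut" is a hypothetical service that runs the tests.
            docker compose --file docker-compose.test.yml run sut
          fi
```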

Automate pull-request approval for Dependabot

- 12 July 2024

Dependabot is a service that automatically updates your project dependencies by creating pull requests. It is a great tool to keep your project up-to-date with the latest security patches and bug fixes. However, managing these pull requests can be time-consuming, especially if you have many dependencies. As a result, many teams end up ignoring Dependabot pull requests, which can lead to security vulnerabilities and other issues.

The example configuration below makes Dependabot automatically update the Docker base image and GitHub Actions. It ignores major version updates for Amazon Linux, Fedora, Oracle Linux, and Rocky Linux, which reduces the noise in the pull requests.
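A configuration along these lines could look like the sketch below. The weekly schedule, the root directory, and the exact image names (`amazonlinux`, `fedora`, `oraclelinux`, `rockylinux`) are assumptions, so adjust them to the base images your Dockerfile actually references.

```yaml
# .github/dependabot.yml (sketch; schedule and image names are assumptions)
version: 2
updates:
  # Keep the Docker base image up to date, but skip major version jumps
  # for the listed distributions.
  - package-ecosystem: "docker"
    directory: "/"
    schedule:
      interval: "weekly"
    ignore:
      - dependency-name: "amazonlinux"
        update-types: ["version-update:semver-major"]
      - dependency-name: "fedora"
        update-types: ["version-update:semver-major"]
      - dependency-name: "oraclelinux"
        update-types: ["version-update:semver-major"]
      - dependency-name: "rockylinux"
        update-types: ["version-update:semver-major"]

  # Keep the actions used in workflow files up to date.
  - package-ecosystem: "github-actions"
    directory: "/"
    schedule:
      interval: "weekly"
```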

How to use GitHub Actions to automatically upload to GitHub Wiki

- 02 December 2023

GitHub is a great platform for hosting open source projects. It provides a lot of features for free, including a wiki for documentation. However, the wiki is primarily designed to be edited through the web interface. This is not ideal, as it makes it difficult to track changes from the repository. But there is a way to automatically upload to the wiki using GitHub Actions, as GitHub provides a way to check out the wiki as a separate repository.

The first step is to create a GitHub Action that will upload the wiki. This can be done by creating a new file in the .github/workflows directory. The following example shows how to create a GitHub Action that will upload the wiki on every push to the master branch and on every change to the .github/workflows/wiki.yml file or the wiki directory.
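A minimal sketch of such a workflow is given here, assuming the wiki pages live in a `wiki` directory and that the default token is allowed to push to the wiki. The checkout path `wiki-repo`, the commit identity, and the commit message are hypothetical choices.

```yaml
# .github/workflows/wiki.yml (sketch; paths and commit identity are assumptions)
name: Publish wiki

on:
  push:
    branches: [master]
    paths:
      - wiki/**
      - .github/workflows/wiki.yml

permissions:
  contents: write

jobs:
  publish:
    runs-on: ubuntu-latest
    steps:
      # Check out the repository that contains the wiki directory.
      - uses: actions/checkout@v4

      # Check out the wiki, which GitHub exposes as a separate repository.
      - uses: actions/checkout@v4
        with:
          repository: ${{ github.repository }}.wiki
          path: wiki-repo

      - name: Copy pages and push changes
        run: |
          cp -r wiki/. wiki-repo/
          cd wiki-repo
          git config user.name "github-actions"
          git config user.email "github-actions@github.com"
          git add --all
          # Commit and push only when something actually changed.
          git diff --cached --quiet || (git commit -m "Update wiki" && git push)
```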

Using environment variables in a devcontainer

- 04 September 2023

Hardcoded variables are never a good idea, and one solution is to update them during deployment, with Ansible for example. Another option is to read those variables from the environment and maintain them via systemd in a separate environment file or as part of the container deployment.

The example below shows how Django reads the environment variables to configure the database connection to PostgreSQL. This way the application can easily be configured, as described in Environment variables set by systemd, and the application itself never has to be modified or redeployed.

Reporting Flake8 finding as GitHub Annotations

- 01 September 2023

In Extending GitHub Actions with Annotations, the output of GitHub Actions was transformed into annotations that could be shown in the web interface. The example showed that part for Flake8, which is a framework that combines different plugins to scan Python code for common mistakes and improvements. Later in 2023, a plugin was released for Flake8 that generates a report that can be used by GitHub Annotations.

By changing the GitHub Actions workflow file as shown in the example below, the plugin flake8-github-annotations is installed and the flake8 commands are executed with the option --format github, as with yamllint. The lines that add the problem matcher can also be removed.
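The relevant part of such a workflow might look like the sketch below. The workflow and job names, the Python version, and scanning the repository root (`.`) are assumptions. The plugin name and the `--format github` option come from the text above.

```yaml
# Sketch only: names, Python version, and scan target are assumptions.
name: Lint

on:
  push:
  pull_request:

jobs:
  flake8:
    runs-on: ubuntu-latest
    steps:
      - uses: actions/checkout@v4

      - uses: actions/setup-python@v5
        with:
          python-version: "3.x"

      # Install Flake8 together with the plugin that emits annotations.
      - name: Install Flake8
        run: pip install flake8 flake8-github-annotations

      # No problem matcher is registered; the plugin formats the output itself.
      - name: Run Flake8
        run: flake8 --format github .
```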

Upgrading to Terraform 1.5

- 01 July 2023

Pinning Terraform versions is a good practice to ensure that your infrastructure-as-code (IaC) is always deployed with a known version of Terraform. This is especially important when using Terraform Cloud, as the version of Terraform used to plan and apply changes is not always the same as the version used to develop the IaC. This can lead to unexpected errors and behavior, but this also requires that you keep your Terraform version up-to-date in the different configuration files.

In the post Run Terraform within GitHub Codespaces, Terraform was installed in the devcontainer using features. To upgrade Terraform, simply update the version number in the devcontainer.json file as shown below.

Run Terraform with GitHub Actions

- 24 June 2023

In the previous post Run Terraform within GitHub Codespaces, the Terraform environment was set up within GitHub Codespaces. The next step is to run Terraform with GitHub Actions via Terraform Cloud as part of a workflow. Scanning the Terraform code with KICS is the first step to reduce technical debt, as described in the post KICS.

Let’s start with the workflow file .github/workflows/terraform.yml, which runs a KICS scan to verify the Terraform code. The workflow is triggered on push and pull request on the master branch. It checks out the Terraform code and runs the KICS scan on the directory terraform, storing the results in the directory build. The scan is limited to the Terraform platform and writes its output as JSON and SARIF so the results can be processed later. It fails on high and medium severity issues, does not add comments to the pull request, and excludes the query with the ID 1e434b25-8763-4b00-a5ca-ca03b7abbb66.
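The description above can be sketched as the following workflow. The pinned action version, the job name, and the explicit step that creates the build directory are assumptions. The input values mirror the settings described in the text.

```yaml
# .github/workflows/terraform.yml (sketch; version pin and job name are assumptions)
name: Terraform

on:
  push:
    branches: [master]
  pull_request:
    branches: [master]

jobs:
  kics:
    runs-on: ubuntu-latest
    steps:
      - uses: actions/checkout@v4

      # Make sure the directory for the reports exists.
      - name: Create output directory
        run: mkdir -p build

      - name: Run KICS scan
        uses: checkmarx/kics-github-action@v1.7.0
        with:
          path: terraform
          output_path: build
          platform_type: terraform
          output_formats: "json,sarif"
          fail_on: high,medium
          enable_comments: false
          exclude_queries: 1e434b25-8763-4b00-a5ca-ca03b7abbb66
```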

Add issues to projects on GitHub

- 22 March 2023

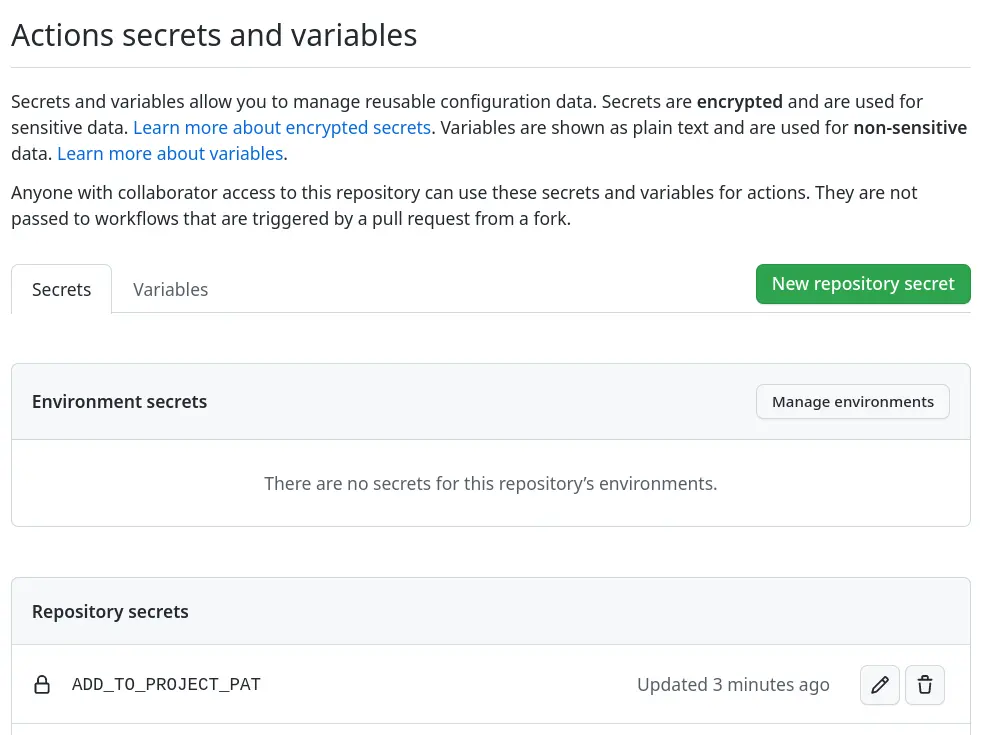

Adding issues to projects on GitHub is a great way to keep track of what needs to be done, but adding them to a project board is a manual process. The action actions/add-to-project automates this by adding issues to a project board when they are opened or labeled.

In the workflows, the environment variable ADD_TO_PROJECT_URL defines the project board by its URL, which can be found in the browser when the project board is opened. The variable is set in the env section of the workflow file.
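A minimal sketch of such a workflow follows. The project URL is a placeholder, and the secret name `ADD_TO_PROJECT_PAT` and the pinned action version are assumptions. The token needs permission to write to the project.

```yaml
# Sketch only: the URL is a placeholder and the secret name is an assumption.
name: Add issues to project

on:
  issues:
    types: [opened, labeled]

env:
  # Hypothetical project URL; copy the real one from the browser.
  ADD_TO_PROJECT_URL: https://github.com/orgs/example-org/projects/1

jobs:
  add-to-project:
    runs-on: ubuntu-latest
    steps:
      - uses: actions/add-to-project@v1.0.2
        with:
          project-url: ${{ env.ADD_TO_PROJECT_URL }}
          github-token: ${{ secrets.ADD_TO_PROJECT_PAT }}
```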

Label and close stale issues

- 10 February 2023

Issues and pull-requests are part of projects and repositories, but can also be forgotten when they grow out of control. You could search for stale issues manually once in a while, but it is another task on someone’s calendar that has to be done. As with the post Add labels to GitHub pull requests, this can also be automated. This way the backlog can be kept small so the team working on it doesn’t have too much outstanding and untouched work.

Both GitHub App probot/stale and GitHub Action actions/stale are solutions that can be used to scan for stale issues and pull-requests, and label them or even close them. Both solutions have their benefits and drawbacks, but let’s see how they’re configured.
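For the GitHub Action variant, a workflow could look like the sketch below. The schedule, the number of days, the label name, and the message are illustrative assumptions and not the values from the original post.

```yaml
# Sketch only: schedule, thresholds, label, and message are assumptions.
name: Stale

on:
  schedule:
    - cron: "30 1 * * *"

permissions:
  issues: write
  pull-requests: write

jobs:
  stale:
    runs-on: ubuntu-latest
    steps:
      - uses: actions/stale@v9
        with:
          # Label after 60 days without activity, close 7 days later.
          days-before-stale: 60
          days-before-close: 7
          stale-issue-label: stale
          stale-pr-label: stale
          stale-issue-message: "This issue has been marked as stale because it has had no recent activity."
```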

Add labels to GitHub pull requests

- 08 February 2023

Labels on issues and pull requests make it easier to understand their content, to see what has changed, and to select them. If a pull request only has the label terraform, for example, it indicates that only infrastructure changes are in play.

While multiple GitHub Actions exist, the two main solutions are Probot Autolabeler and GitHub Actions Labeler. The first solution is based on a GitHub App that must be installed and have permission to update pull requests; the second solution is based on a workflow that runs a GitHub Action.
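For the workflow-based solution, a sketch with actions/labeler is shown below as two YAML documents: the workflow and its configuration file. The `terraform/**` path and the label name are assumptions based on the terraform label mentioned earlier.

```yaml
# .github/workflows/labeler.yml (sketch)
name: Labeler

on:
  pull_request_target:

permissions:
  contents: read
  pull-requests: write

jobs:
  label:
    runs-on: ubuntu-latest
    steps:
      # Reads .github/labeler.yml by default.
      - uses: actions/labeler@v5
---
# .github/labeler.yml (sketch; the path is an assumption)
# Add the label "terraform" when files under terraform/ change.
terraform:
  - changed-files:
      - any-glob-to-any-file: "terraform/**"
```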

Create GitHub issues on a schedule

- 04 February 2023

In post Custom GitHub templates for issues, the first step was made to automate the workflow more by defining issue templates on the organizational level and assigning labels when creating a new issue. A human still needs to create the issue manually while some issues must be created on a schedule to deploy new certificates or run an Ansible playbook to patch servers for example.

Like in post Start using GitHub Dependabot where merge requests were automatically created for updated dependencies, issues can also be created on a schedule. Let’s create a workflow that creates an issue every month for recurring maintenance that must be done.
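One way to sketch this is a scheduled workflow that calls the GitHub CLI, which is available on GitHub-hosted runners. The cron expression, the title, the body, and the `maintenance` label are assumptions, and the label must already exist in the repository.

```yaml
# Sketch only: schedule, title, body, and label are assumptions.
name: Monthly maintenance issue

on:
  schedule:
    # 06:00 UTC on the first day of every month.
    - cron: "0 6 1 * *"

permissions:
  issues: write

jobs:
  create-issue:
    runs-on: ubuntu-latest
    steps:
      - name: Create the maintenance issue
        env:
          GH_TOKEN: ${{ github.token }}
        run: |
          gh issue create \
            --repo "$GITHUB_REPOSITORY" \
            --title "Monthly maintenance $(date +'%B %Y')" \
            --body "Recurring maintenance that must be done this month." \
            --label maintenance
```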

Custom GitHub templates for issues

- 03 February 2023

Automating workflows reduces the need to think about them, but can also guide new people in the right direction. One of these workflows is creating issues. One could use the default templates provided by GitHub to create an issue for a bug or a new feature. However, the default templates may not fulfill all requirements needed for a smooth workflow for a project on GitHub.

By default, GitHub has templates for issues and pull requests, but they can be overridden on both the organization and the repository level, meaning that the most specific template set will be used when creating an issue or pull request. First, we will define templates for the whole organization by creating the .github repository within the organization.
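As a hedged illustration, an organization-wide template could be stored in the .github repository as an issue form. The file name, the field names, and the `bug` label are assumptions. The original post may well use Markdown templates instead.

```yaml
# .github/ISSUE_TEMPLATE/bug_report.yml in the organization's .github repository
# (sketch; file name, fields, and label are assumptions)
name: Bug report
description: Report something that is not working as expected
labels: ["bug"]
body:
  - type: textarea
    id: what-happened
    attributes:
      label: What happened?
      description: Describe the problem and how to reproduce it.
    validations:
      required: true
```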

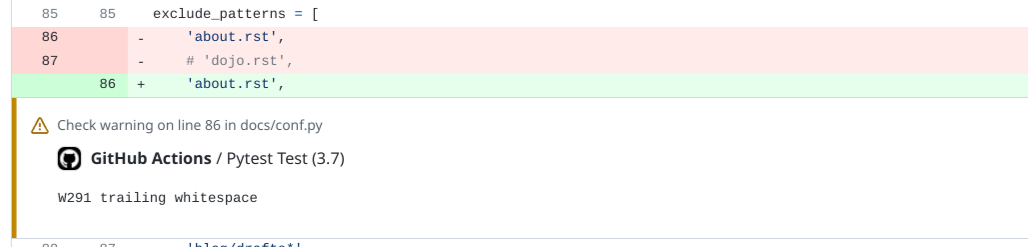

Extending GitHub Actions with Annotations

- 04 January 2023

With the introduction of GitHub Actions, automated testing became more accessible and integrated into pull requests, making it clearer what is being merged and whether it meets all requirements. This makes other services like Dependabot easy to use to keep your code up to date with small changes in dependencies, for example. Reviewing code or documentation changes can be more difficult when a linter like yamllint or flake8 gives an error or warning, as you have to dig into the logs to search for what is wrong.

GitHub Actions also supports annotations that are presented in the web interface, so you can directly see which notifications there are, including files and line numbers, as shown below. This way feedback from a workflow executed by GitHub Actions is presented in the web interface.
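Annotations are created by printing workflow commands to the log. The sketch below emits a single warning. The file name, the position, and the message are made up for illustration.

```yaml
# Sketch only: file, line, and message are hypothetical.
name: Annotation example

on:
  pull_request:

jobs:
  annotate:
    runs-on: ubuntu-latest
    steps:
      - name: Emit an example annotation
        run: |
          # The "::warning" workflow command attaches the message to a file and line.
          echo "::warning file=app.py,line=10,col=1::Line too long"
```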

Require a specific Terraform version

- 03 January 2023

HashiCorp offers Terraform Cloud as a service to run Terraform and keep the state instead of having a local copy of the state databases. This is great to make full use of Infrastructure-as-Code tools like Terraform, and everyone can run them without losing the correct state. But when setting up a deployment plan, a specific version of Terraform has to be selected manually in the web interface, and you also have to manually increase it when new versions come out.

As the version can, for now, only be set via the web interface of Terraform Cloud, a lot of people forget to set it to a higher version. This causes life-cycle-management issues: plans work for repository A, but not for repository B, as both plans use a different version of Terraform. While currently no option exists to define the version of Terraform to use when the plan runs, the configuration does allow you to specify which version of Terraform is required.

Run Terraform within GitHub Codespaces

- 20 August 2022

Using GitHub Codespaces allows you to work on your code from almost any place in the world with an Internet connection. However, the devcontainers powering Codespaces are meant to be short-lived and should not contain any credentials. This may pose a challenge when you’re depending on remote services like Terraform Cloud that require an API token to work properly.

Most devcontainers follow the Microsoft devcontainer template, and those are based on Debian, which gives you access to a huge repository of packaged software. Terraform isn’t part of the standard Debian repository, but HashiCorp provides its own repository that can be added. Let’s start by extending the Dockerfile to add the repository and install the Terraform package as highlighted below.

Scanning with KICS for issues in Terraform

- 28 May 2022

During a recent OWASP Netherlands meetup, security scanners were discussed as a way to prevent mistakes, and Checkmarx presented their tool KICS for scanning for security vulnerabilities and configuration errors in Infrastructure-as-Code. Development of KICS has moved fast since late 2020, and it can catch some common mistakes in well-known Infrastructure-as-Code definitions like Terraform, CloudFormation, and Ansible.

KICS is written in Go and can be used as a standalone scanner or with GitHub Actions. For now, let’s test it with a Terraform configuration in a GitHub Workflow to see how it works and how useful it is. Maybe in the future, we will test it with Ansible and Docker as well.

Removing invalid state from Terraform

- 23 April 2022

Terraform keeps a cache of state files in the .terraform directory stored in Terraform Cloud so that it can be accessed by everyone in the organization. For existing resources, Terraform has to import the state for a defined resource; otherwise it will fail. Sometimes the state is invalid or an API returns an unexpected code, and Terraform fails to proceed.

The example below passes the error from the Cloudflare API via Terraform Cloud to the user, but does not indicate what the error is. Manual verification of the state showed that some resource records had already been removed from the zone, which triggered an 81044 error. But the state was not removed, and Terraform Cloud could not find the resource record to remove from the state database.

Migration to Cloudflare Pages

- 23 December 2021

What started as a custom content management system quickly moved to WordPress to improve its maintainability, and that solution served its purpose over the years. Having an easy web-based editor to maintain everything is a good thing, but sadly also a bad thing. WordPress is a known target for attacks and you have to keep up to not be compromised, but this also means you have to keep up with how WordPress generates its pages; otherwise the pages will not be shown correctly.

To reduce time and complexity, another solution was required, as deploying WordPress with content every time wasn’t very effective. Most of the content was already in Markdown format to bypass certain limitations, so the next question was how to deploy it from GitHub. Static website generators like Jekyll, Sphinx, and Pelican came into the picture, as they would remove the dependencies on installed code and a database.